AI Data Control Planes and Omnichannel Personalization

In today's marketing world, getting omnichannel personalization right is the real deal, but it's super hard. It's all about making content feel like a cozy chat with each customer, knowing what makes them tick, and making sure the data and creative content get orchestrated into the right buckets for delivery. This article takes you on a ride through the marketer's turf and some of the IT/Dev turf because, as you saw in previous articles, both need to collaborate to pull off the hardest aspect of marketing ever devised.

I know I sound like a broken record. Still, the perspectives and nuances differ in some ways, and there are newer innovations, which other authors have alluded to outside of marketing, that I will illustrate here. Let's bring some of them together.

Cracking the Omnichannel Personalization Code

Before we jump in, let's get the hang of what omnichannel personalization is all about. It's like being at a party and having cool, seamless chats with friends, whether they're next to you or texting from across the room. I have written three other articles on this topic. I discussed healthcare and life sciences in two of them, but it could be any industry. Healthcare and life sciences are just woefully behind. Retailers, on the other hand, have specialized in personalization on the website, mobile app, and email, and in some cases have pushed offers to the call center. The issue is that those have all been multichannel approaches. Only recently, in the last two years or so, have they slowly begun to fully coordinate campaigns across those channels, which is what omnichannel means. In my opinion, based on my field experience with hundreds of clients, that coordination is still very loose and not well managed by any organization.

Unifying the customer profile has helped this along, if in a limping manner. The CDP, while not the silver bullet some have claimed, still helps move the needle. All industries are still struggling. So let's dig in and see where the next opportunity to coordinate the profile in a more controlled way might be. I am going to propose more automation in product functionality to abstract and simplify the workflow, help IT/Dev and data science teams talk to each other, and help business teams gain much more value from AI and ML while leveraging their existing investments.

The Significance of Data Workflows

Data is the lifeblood of personalization. Without relevant, timely, and accurate data, personalization remains an elusive goal. I discussed data strategies, data management, and identity in a few other articles. If you read through those, some of the steps below are abbreviated versions of what they cover. The steps are the same, but their naming may differ slightly here to provide more clarity and less technical jargon.

Here's how a structured data workflow can empower Omnichannel Personalization marketers:

Data Collection: The first step in the workflow is gathering information. It's crucial to have tools and systems to collect data from many sources: online interactions, behavioral analytics, offline touchpoints, and more. There is so much content (videos and articles) out there on this stage, and so many vendors and tools, that it's hard to tell how to approach it. In fact, I have been working on other articles about the options you have in this area to reduce your costs dramatically. The tools, connectors, and integrations are so pervasive that there is no reason to pay hundreds of thousands to millions of dollars in data collection event fees. I am developing ideas around building your own data collection tools with distributed, scalable frameworks like Akka, possibly WebAssembly, coupled with serverless solutions, or using off-the-shelf open source tools like https://jitsu.com/ or other tools like Keboola or Estuary that are much cheaper than traditional web tag management tools. The idea of creating your own hybrid approach is directly tied to your data pipelines, where Snowflake and Databricks provide the foundation. Many vendors in the CDP market already bundle this with their platform so you do not get double- or triple-billed.

Data Integration and Data Pipeline: Once collected, data must be integrated to provide a unified view of the customer. Marketers must understand a customer's journey, from their first interaction with the brand to their most recent purchase. Again, this is an area heavily neglected in the CDP and Martech stack. Data lineage, meaning how data flows end to end, is critical, especially for governance and auditing.

There are dozens of these newer data management tools that are not exposed inside the CDP's insight capability, so they are often hidden behind IT's covers. dbt, Estuary, Keboola, Airflow, and others I mentioned in my other articles are all buried in the data stack, and the business has no insight into, or trust in, the data it is using. I believe the flows from these tools can be abstracted and surfaced across the organization in a hybrid or homegrown way. Some open source tools like Zingg.ai can help with profile deduplication within Snowflake and Databricks, and there are many other similar examples. The business needs a comprehensive view into the data flow: a new interface, a dashboard, or what I propose later in this article, an AI/Data Control Plane.

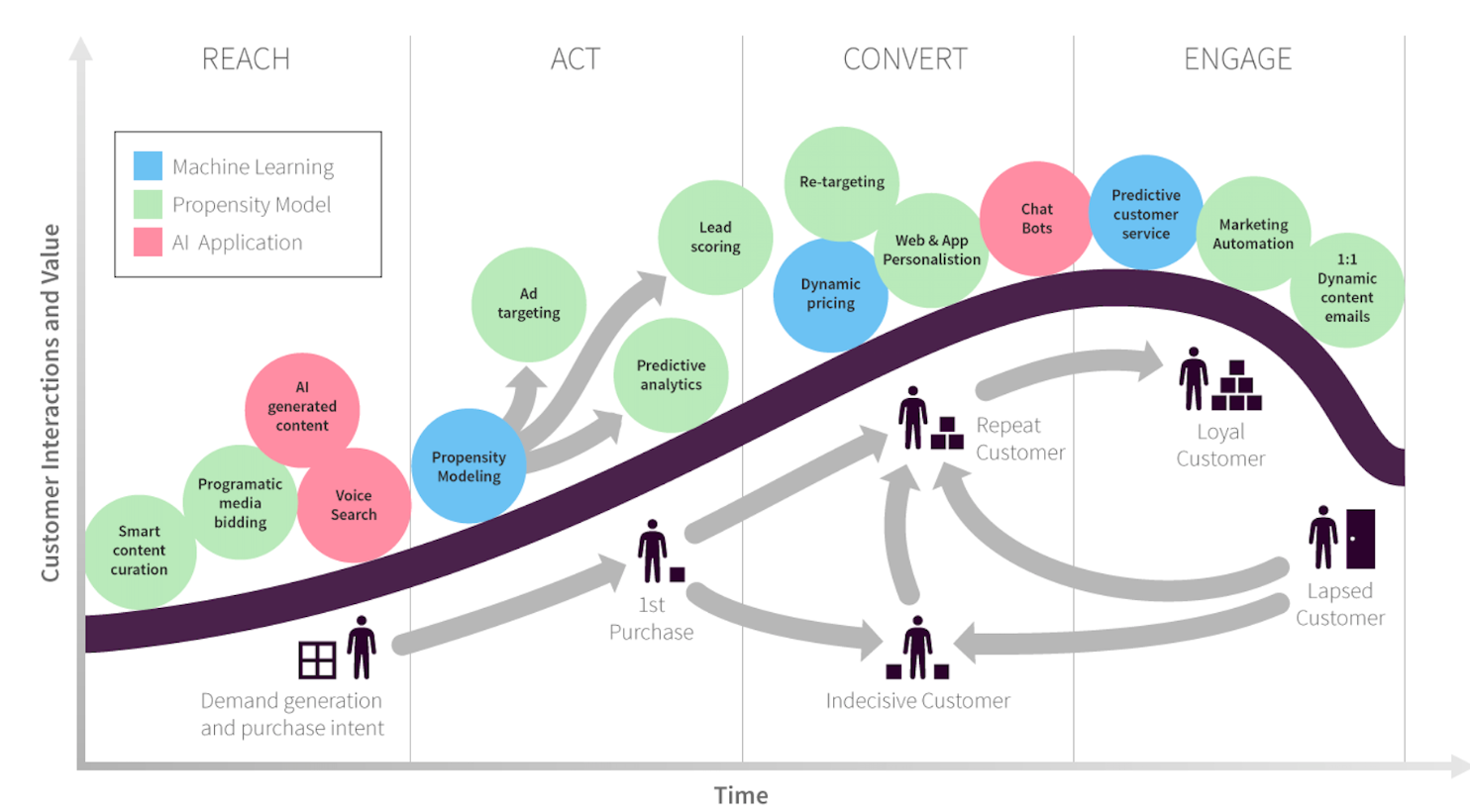

Data Analysis: With integrated data, marketers can segment their audience based on different criteria, such as purchase history, behavior, or demographics. This segmentation forms the backbone of personalized content and recommendations. AI is a core tenet of this layer. Models like Market Basket Analysis, Root Cause Analysis, RFME (recency, frequency, monetary, engagement), Churn, Look-alike, Next-Best Offer, and Propensity are part of this stage. In practice, CDPs are now taking on this role. The CDP Institute has classified CDPs into different categories, but I believe every CDP should have the basic core of these capabilities. I have a checklist in a previous article of other tools a CDP might have when coupled with the data integration part of the platform.
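To give the segmentation models a bit more color, here is a minimal sketch of the recency, frequency, and monetary part of RFME scoring. It is illustrative only: the `Customer` fields, the score cut-offs, and the segment labels are all assumptions I made up for the example, and the engagement dimension is left out to keep it short.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Customer:
    customer_id: str
    last_purchase: date   # recency input
    order_count: int      # frequency input
    total_spend: float    # monetary input


def score(value: float, thresholds: list[float]) -> int:
    """Return 1 plus the number of thresholds the value meets or exceeds."""
    return 1 + sum(value >= t for t in thresholds)


def rfm_segment(c: Customer, today: date) -> tuple[int, int, int, str]:
    """Score a customer 1-3 on each dimension and attach a segment label."""
    days_since = (today - c.last_purchase).days
    # Recency is inverted: fewer days since the last purchase scores higher.
    r = 4 - score(days_since, [30, 90])        # hypothetical cut-offs (days)
    f = score(c.order_count, [3, 10])          # hypothetical cut-offs (orders)
    m = score(c.total_spend, [100.0, 500.0])   # hypothetical cut-offs (dollars)
    if r + f + m >= 8:
        label = "champion"
    elif r == 1:
        label = "at_risk"
    else:
        label = "developing"
    return r, f, m, label


jane = Customer("jane", date(2023, 10, 20), 12, 640.0)
print(rfm_segment(jane, date(2023, 10, 31)))  # (3, 3, 3, 'champion')
```

In a real stack these scores would more likely be computed in the warehouse over the full profile universe, with cut-offs derived from the data (quantiles, for example) rather than hard-coded.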

Data Activation: After segmentation, the data is activated by deploying it across various channels. This ensures that the personalized content reaches customers wherever they interact with the brand. Again, this is part of the CDP, but it is also split further downstream into traditional Martech delivery solutions like Adtech, onsite/on-property personalization, loyalty, and automation tools. They all have personalization automation but often silo their profiles and do not share well across the stack. What do they need to do to provide personalization? What components comprise a solid personalization strategy?

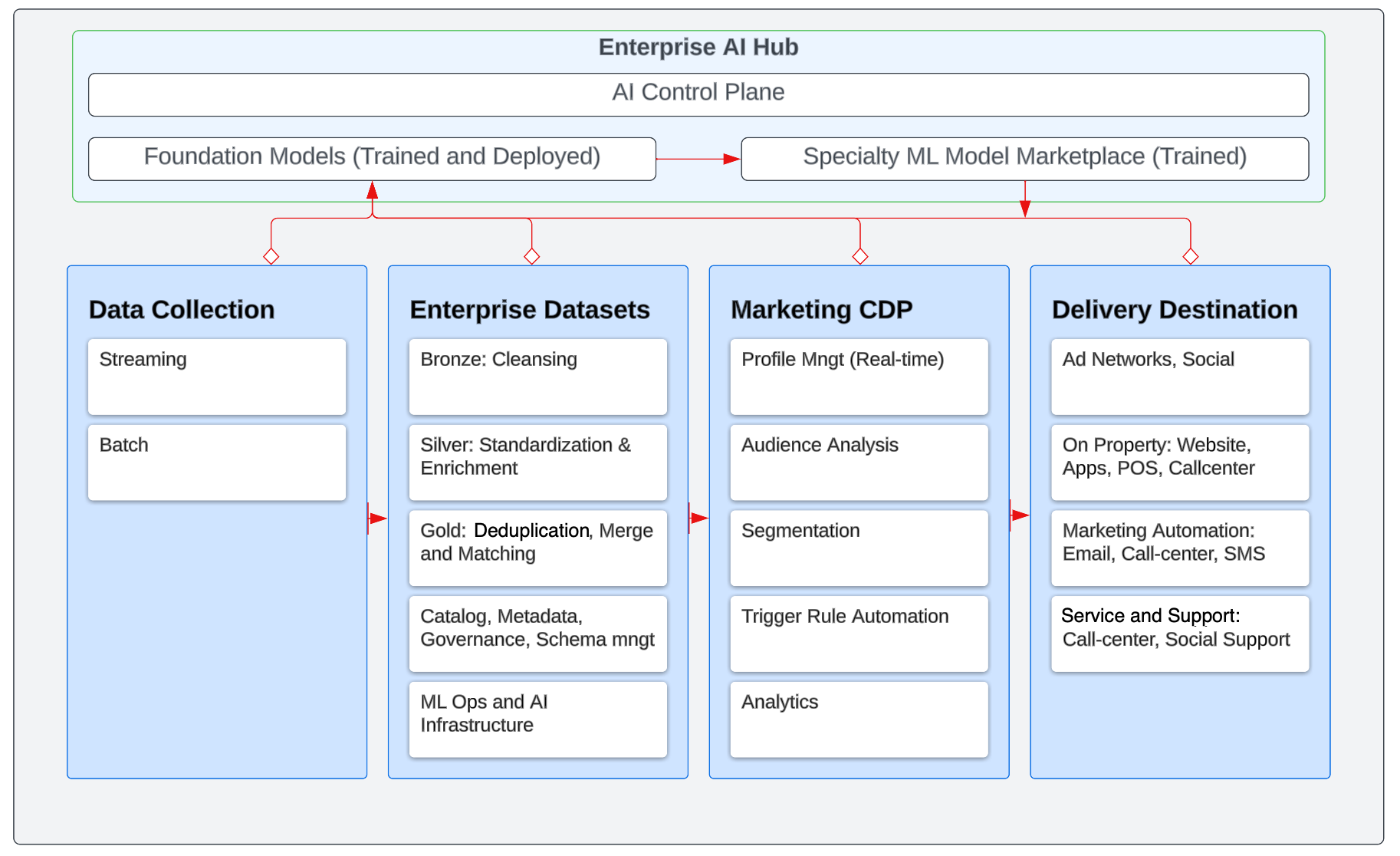

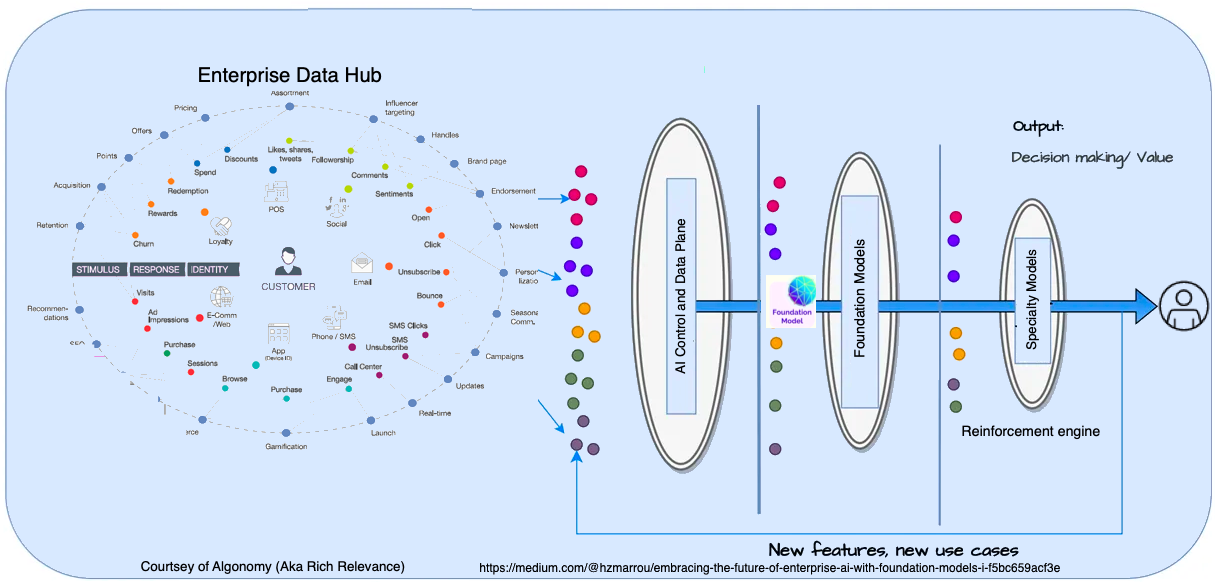

A lot is missing in the diagram below, but it aligns with the other illustrations I have shared in the past articles. The approaches and concepts are consistent. What is new is the AI Control and Data Plane. I explain this more below.

Omnichannel Personalization

Achieving the right level of personalization requires careful planning and execution. There are themes marketers can employ in campaigns for specific journey touch points. Each campaign is then associated with a set of strategies, typically in the form of machine learning models. The models are placed into a library, then stacked and ranked by touch point. Each touch point might have 2-10 model strategies assigned. The personalization engine then chooses the right strategy at the right time for the customer profile context. It uses inputs from questions such as: Did they buy something yesterday or last week? How much did they buy? What did they buy? When was the last time they visited? What did they search for, browse, or click on? These are called RESPONSES to the CAMPAIGN STIMULUS by the CUSTOMER IDENTITY. Since all of these data attributes are inputs into the model strategies' decision process, there need to be marketing themes to drive the models for personalization. I've detailed a few personalization theme use cases below and illustrated them with a customer profile and a company to give the ideas more color.
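The selection step described above can be sketched in a few lines. This is a deliberately simplified, rule-based stand-in: each strategy has a rank and an eligibility rule, and the engine returns the highest-ranked strategy that fits the profile. The strategy names, the `ProfileContext` fields, and the 7-day window are hypothetical, and a production engine would more likely compare model scores than walk a fixed ranking.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ProfileContext:
    days_since_last_purchase: Optional[int]  # None means never purchased
    cart_items: int
    recent_searches: list[str]


@dataclass
class Strategy:
    name: str
    rank: int                                   # lower rank is tried first
    applies: Callable[[ProfileContext], bool]   # eligibility rule


# Hypothetical strategy library for one touch point (the homepage).
HOMEPAGE_LIBRARY = [
    Strategy("cart_abandonment_reminder", 1, lambda p: p.cart_items > 0),
    Strategy("post_purchase_cross_sell", 2,
             lambda p: p.days_since_last_purchase is not None
             and p.days_since_last_purchase <= 7),
    Strategy("search_based_recommendations", 3,
             lambda p: bool(p.recent_searches)),
    Strategy("bestsellers_fallback", 4, lambda p: True),
]


def choose_strategy(library: list[Strategy], profile: ProfileContext) -> str:
    """Return the highest-ranked strategy whose rule matches the profile."""
    for strategy in sorted(library, key=lambda s: s.rank):
        if strategy.applies(profile):
            return strategy.name
    raise LookupError("library has no matching strategy")


# Jane bought two days ago, has an empty cart, and searched for yoga mats.
jane = ProfileContext(days_since_last_purchase=2, cart_items=0,
                      recent_searches=["yoga mats"])
print(choose_strategy(HOMEPAGE_LIBRARY, jane))  # post_purchase_cross_sell
```

The always-true fallback at the bottom of the ranking means every profile gets some experience, even a brand-new visitor with no history.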

Use Case Themes:

- Abandonment and Reengagement

- Content Personalization

- Email Capture Strategies

- Journey Personalization

- Product Discovery

- Promotional Messaging

- Recommendations

- Triggered Messaging

- Urgency and Demand Strategies

Let's look at some omnichannel personalization themes using a sportswear retailer, "SportsFlex," and a consumer, "Jane." SportsFlex is looking to engage its customers more effectively across its omnichannel initiatives. Assume SportsFlex has 60M customer profiles in its enterprise data hub. Imagine how difficult it becomes to personalize 1:1 across that many profiles, and then to have enough creative images, content fragments, and messages in a DAM connected to a CMS/WMS to assemble at the instant of each user's touch point. These themes will seem uncoordinated at first. Later in the article, we will tie some of them together for Jane to illustrate full omnichannel.

Use Case Themes:

Journey Personalization:

- Theme: Creating a tailored path for Jane that matches her interests and habits, ensuring she feels catered to every step of the way.

- Illustration: When Jane logs in to SportsFlex after a long day, the homepage showcases her recent searches, such as "yoga mats" and "Pilates gear." Additionally, the navigation bar has a section titled "Yoga & Pilates" given her frequent visits to these sections. If Jane came to SportsFlex from a yoga blog, an on-site welcome message might say, "Welcome Yoga Enthusiast! Explore our latest yoga collections."

Product Discovery:

- Theme: Ensuring Jane quickly and effectively finds products she loves, enhancing her shopping experience.

- Illustration: As Jane browses the yoga section, products are listed in order of her previous purchase patterns – so yoga mats of her favorite color, teal, might be at the top. A sidebar also pops up, challenging her to a fun quiz: "Discover Your Yoga Style!" Based on her answers, she gets product recommendations like bohemian-patterned yoga pants or a minimalistic yoga block set, making product discovery engaging and personal.

Promotional Messaging:

- Theme: Sending Jane relevant offers and promotions tailored to her preferences, ensuring she feels valued and increasing the likelihood of a purchase.

- Illustration: Because SportsFlex knows Jane is from Miami and frequents the yoga section, it sends her a mobile notification: "Sunset Yoga Sale! Special discounts for our Miami yogis: 20% off all yoga gear until sunset!" She might also get a location-based message near a physical store: "Passing by? Drop in for an exclusive in-store discount on our latest yoga collection."

Urgency and Demand:

- Theme: Inducing swift decision-making by Jane through strategies that highlight the demand for products or create a sense of time-sensitive urgency.

- Illustration: While Jane's browsing yoga mats, she notices a banner on a teal-colored mat she likes: "Only 3 left in stock!" Simultaneously, a timer on the bottom corner counts down: "Hurry! 20% discount ends in 01hr:23min" Such strategies nudge her to make a quick purchase, fearing she might miss out on her desired item or a fantastic deal.

For "SportsFlex," these themes aren't tactics; they're the backbone of a comprehensive, data-driven omnichannel initiative, ensuring Jane's experiences are consistent, personal, and engaging across all touchpoints. There are hundreds of tactics within each strategy theme. By marrying data insights with thoughtful execution, "SportsFlex" crafts a shopping journey for Jane that feels almost bespoke, driving brand loyalty and revenue. We tie the themes to the specific personalization model strategies noted next. These models are built out for each user profile 1:1. They are the models in the library that the personalization engine uses to make the right decision in pursuit of our omnichannel goal.

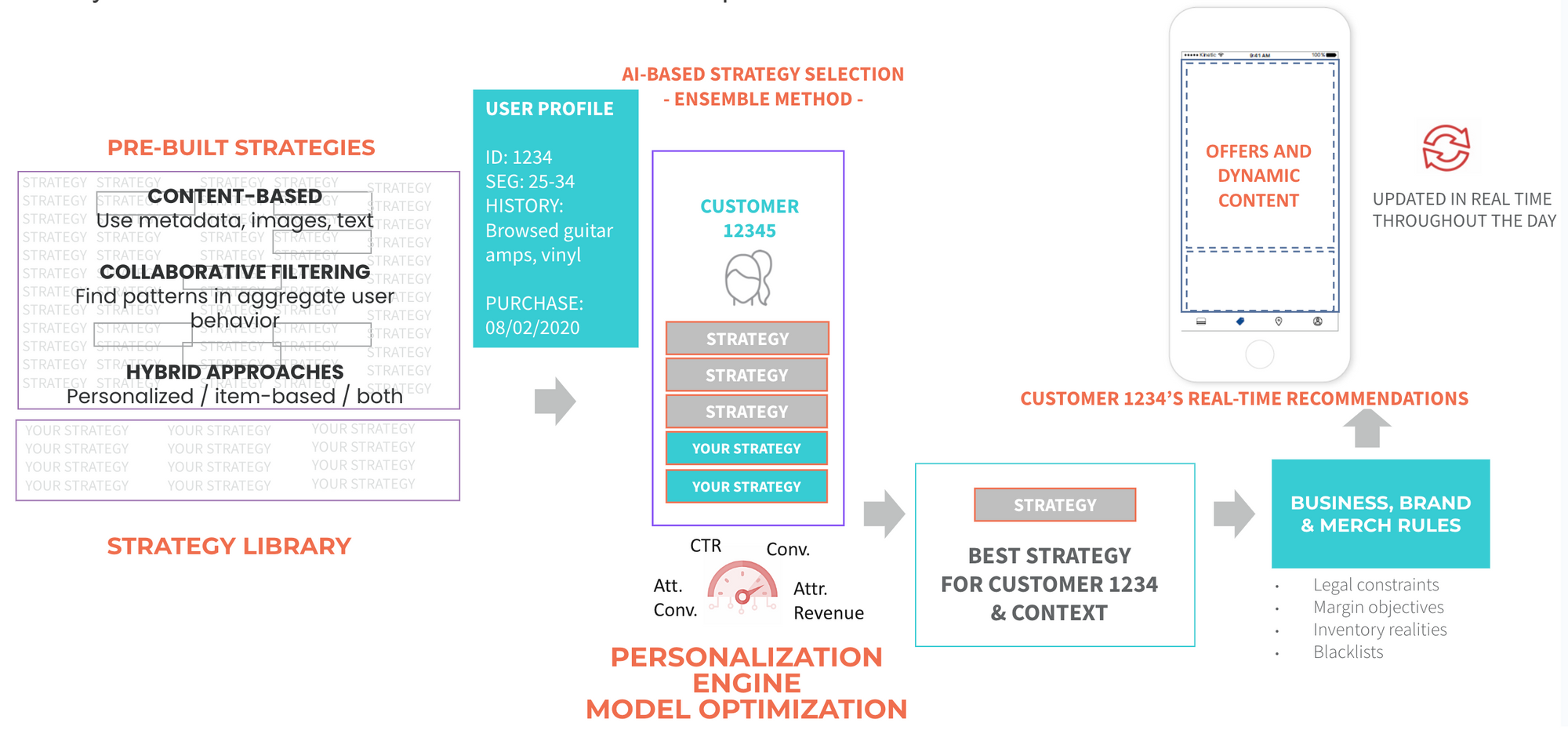

Two vendors that were and are at the forefront of this multi-model machine learning approach are Dynamic Yield and Rich Relevance (now part of Algonomy). There are other, newer vendors. My idea of a layer above the specialty model strategies is the next evolution: leveraging the newer LLMs (large language models), now grouped under the broader term foundation models, as inputs to the personalization specialty model library. Even if the older vendors mentioned are behind the eight ball, they could easily incorporate the new AI/Data Control Plane and foundation models into their infrastructure. Both of the vendors named above have started to do this with deep learning, hyper-personalization, and other approaches. The innovations they have created are still highly valuable and have not changed the basics of Marketing 101 regarding segmentation, personalization, and the highly coveted 1:1 conversation with customers.

These two vendors are the benchmark against which to measure the laggards. Any new vendor should take heed of what these two have accomplished. No CDP out there can match what they have done regarding how personalization should work, both multichannel and now omnichannel. Make sure you challenge any CDP vendor's personalization message or approach with deep due diligence. As mentioned above, I call this an AI Control Plane that feeds into a Personalization Control Plane.

What does the Rich Relevance personalization data flow look like?

Per the illustration, Dynamic Yield and others are not much different. Some vendors, like Adobe or Optimizely, are much simpler and not as heavy on the model selection and ensemble process. There is a range: some vendors are more focused on AI/ML-driven 1:1 personalization, others started as A/B testing tools and are light on model choices, and others are more specialized in areas like search and product discovery, such as Certona or Algolia.

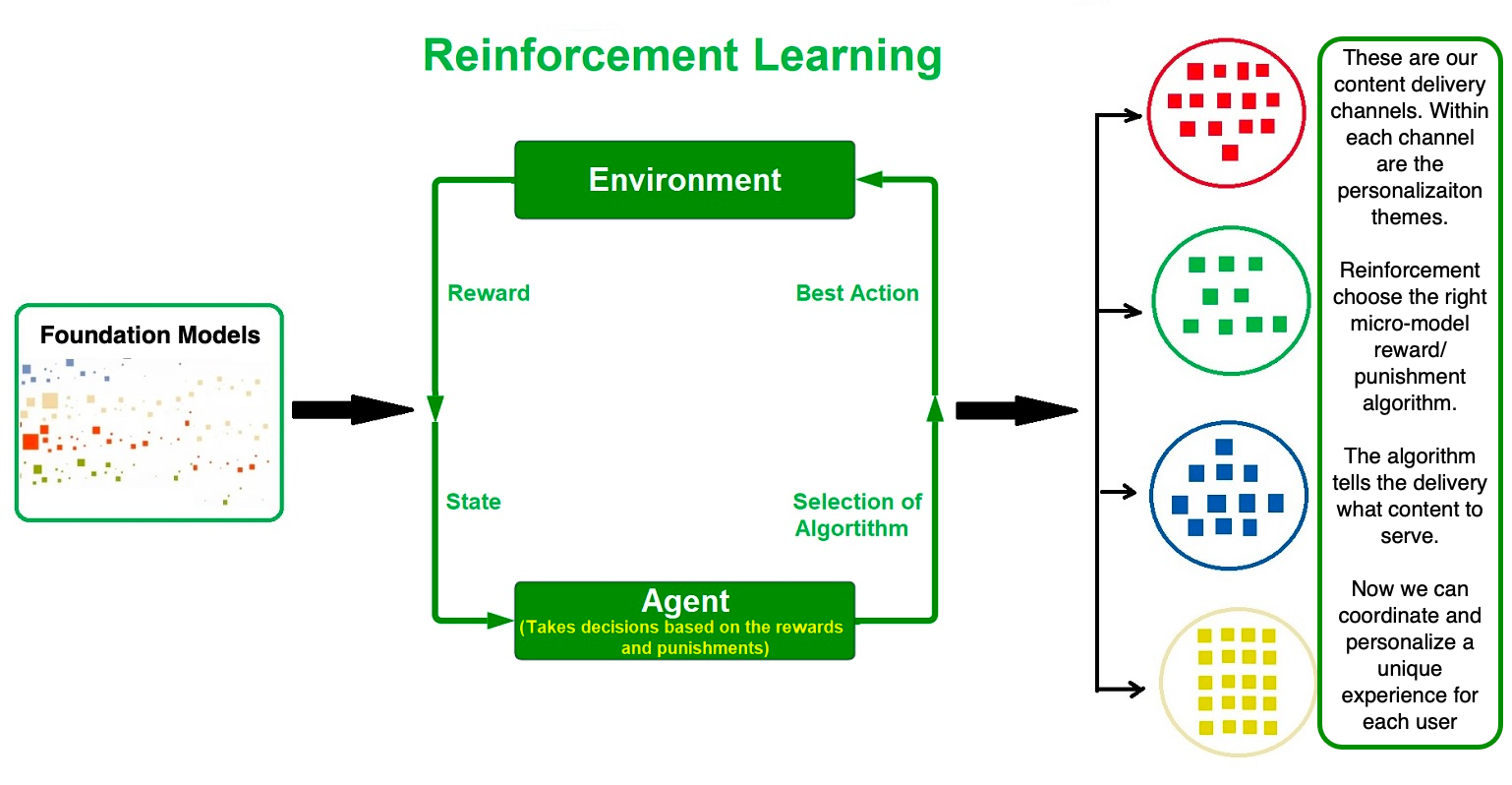

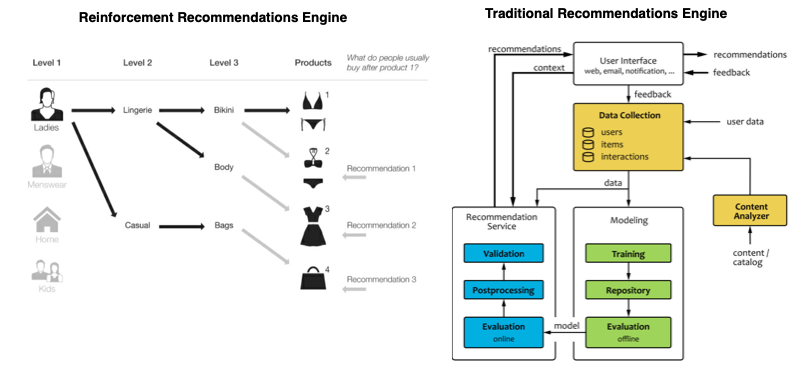

While the AI data control plane and foundation models provide a robust base, a natural progression is to integrate reinforcement learning (RL) as the new downstream personalization engine. It could replace the older ensemble model library approach. This is essential for several reasons. First, with RL an organization can customize the UI for every user, ensuring their app or website experience is like no other. Traditional web shopping, mobile apps, and service apps can be completely customized on the fly without managing individual model libraries and rules for each themed strategy.

As users navigate the channel experience, the interface intuitively molds itself, adapting in real time to mirror their preferences and behaviors. But what makes RL stand out as the next pivotal phase is its innate ability to evolve with each interaction. With every click a user makes, machine learning models underpinned by reinforcement learning spring into action. In other words, the reinforcement learning model houses the library of smaller use-case strategies. Each underlying micro-model is now a proactive learner, gleaning insights and constantly refining its understanding. This continuous cycle of learning and adaptation aims to optimize for whatever target metric a business prioritizes, be it retention, revenue, or lifetime value (LTV). In essence, while the AI data control plane sets the stage, reinforcement learning brings dynamic, real-time personalization to life, making it an indispensable cog in the machinery of contemporary AI-driven user experiences. There are a few entrants in this space. One notable new vendor is https://flowrl.ai/.
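To make the "learn from every click" loop concrete, here is a minimal sketch of an epsilon-greedy multi-armed bandit, which is about the simplest relative of reinforcement learning. It is not how any named vendor works, and it ignores customer state entirely; a contextual bandit or full RL agent would condition its choice on the profile. The class name, arm names, and the 0.1 exploration rate are assumptions for illustration.

```python
import random


class EpsilonGreedyBandit:
    """Pick one of several experience variants and learn from rewards."""

    def __init__(self, arms: list[str], epsilon: float = 0.1, seed: int = 0):
        self.arms = arms
        self.epsilon = epsilon                       # share of traffic spent exploring
        self.counts = {arm: 0 for arm in arms}       # times each arm was shown
        self.values = {arm: 0.0 for arm in arms}     # running mean reward per arm
        self.rng = random.Random(seed)

    def select(self) -> str:
        # Explore with probability epsilon, otherwise exploit the best arm so far.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=lambda arm: self.values[arm])

    def update(self, arm: str, reward: float) -> None:
        # Incremental mean: new = old + (reward - old) / n
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


# Hypothetical homepage banners; reward is 1.0 for a click and 0.0 otherwise.
bandit = EpsilonGreedyBandit(["yoga_banner", "running_banner"])
shown = bandit.select()
bandit.update(shown, reward=1.0)
```

The reward passed to `update` is where the target metric comes in: it could be a click, a purchase amount, or a longer-horizon signal such as retention, depending on what the business wants to optimize.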

Coordination of a Robust Personalization Strategy

To achieve personalization, it's not enough to have a single theme at one touch point or a little data tied to one model. It's essential to understand the numerous elements that can be personalized and how they fit into the overall micro-model strategy library, so that the reinforcement engine can choose the right strategy model and the user's context can span omnichannel campaign initiatives across all delivery solutions in the stack.

While a legacy personalization engine could power a few channels, in nearly all vendor cases it cannot power all of them. This deficiency has handicapped omnichannel initiatives, which is what motivates the idea of an A.I. control and data plane. With the addition of a reinforcement learning engine, we can populate micro-specialty strategies for the personalization themes. For a deeper technical dive into reinforcement learning, see Louis Kirsch's blog or search on Google, where there is a massive number of illustrations.

In this approach, all the data modeled, trained, deployed, and shared comes from a centralized source, but that centralized foundation has access to dozens and, in some cases, hundreds of datasets. The process consumes atomic-level data and coalesces it into a central foundation (the LLMs), then feeds it into the reinforcement engine, which parses it back to the atomic level for specialty use cases. The reinforcement engine uses defined algorithms to assemble the content accurately at the touch point. My friend Eric Best talks about this atomic data concept at a high level for his product, Soundcommerce. These atomic datasets could all be used to populate the foundation model, and the AI Data Control Plane would be the tool to help orchestrate it. Personalization is a bit different, but from a data management standpoint, the concept is similar. Here is an illustration to help visualize the concept.

To provide an integrated omnichannel approach using a coordinated example for each personalization approach, let's continue to use an example of a sportswear retailer – "SportsFlex."

Let's dig in, get further downstream, and build on our themes. The themes below are built to coordinate with the customer journey rather than stand alone, as they did in our earlier examples. The reinforcement learning engine decides the tactical touch-point personalization context and content across a full journey. RL is our library now. Let's build an illustration of how SportsFlex might use one or more channels for one of its customer profiles. We will use Jane as our example. It's simple, but we could update and modify the content and UI for every interaction with Jane. It's full 1:1, and Jane is only one profile in our universe of 60 million profiles.

Behavioral Strategy:

- Approach: Understand user behavior to customize aspects of their interaction.

- Illustration: Jane, a frequent buyer from SportsFlex, often checks out yoga and Pilates gear. When she logs in, her homepage highlights the latest yoga mats, Pilates balls, and offers a special discount on yoga pants, leading her directly to what she might be interested in.

Recommendation Strategy:

- Approach: Algorithms suggest items based on user patterns.

- Illustration: After Jane purchases a yoga mat, the website recommends a matching yoga mat bag, yoga block, and a strap. The mobile app also nudges her with similar suggestions the next time she opens it.

This endpoint could be one further step fed by the reinforcement engine, or it could stand alone. Recommendations are a bit different in approach, so they could be served by an endpoint specialty engine that is populated by the behavior above but originates from the foundation or reinforcement models. This all happens instantly within the specialty model, which could be triggered or updated from the foundation in real time or in batch, depending on the platform specs.

Search and Discovery Strategy:

- Approach: Enhance search results based on personal preferences.

- Illustration: When Jane searches for "shoes" on the website, the results prioritize yoga shoes or slip-resistant socks, given her previous purchases, ensuring she finds relevant products faster.
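A minimal sketch of this kind of re-ranking, assuming the search engine already returns a relevance score per product and the profile carries a per-category affinity score. The field names, the affinity values, and the 50/50 blend weight are all hypothetical.

```python
def rerank(results: list[dict], category_affinity: dict[str, float],
           weight: float = 0.5) -> list[dict]:
    """Blend the engine's relevance with the shopper's category affinity."""
    def blended(item: dict) -> float:
        # Categories the shopper has never touched get an affinity of 0.
        affinity = category_affinity.get(item["category"], 0.0)
        return (1 - weight) * item["relevance"] + weight * affinity
    return sorted(results, key=blended, reverse=True)


# Raw results for the query "shoes", ordered by relevance alone.
results = [
    {"name": "Trail running shoes", "category": "running", "relevance": 0.9},
    {"name": "Basketball shoes", "category": "basketball", "relevance": 0.8},
    {"name": "Yoga grip socks", "category": "yoga", "relevance": 0.7},
]
jane_affinity = {"yoga": 0.9, "running": 0.2}  # hypothetical profile scores

for item in rerank(results, jane_affinity):
    print(item["name"])
# Yoga grip socks
# Trail running shoes
# Basketball shoes
```

Setting `weight` to 0 returns the engine's original order, which gives a simple dial for how aggressively the profile should override plain relevance.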

Offline to Online Strategy:

- Approach: Combine offline and online data for a 360-degree customer view.

- Illustration: Jane recently bought a sports bra from a physical SportsFlex store. The next time she logs into the website, she sees suggestions for matching leggings or washing instructions for the product she bought in-store.

Region and Segment-based Strategies:

- Approach: Customize offers/content based on the user's location or demographic.

- Illustration: Jane, who is from Miami, logs into her account and sees a promotion for summer-friendly yoga wear suitable for Miami's weather. Simultaneously, a user from Seattle might see rainproof running gear.

Content Strategies:

- Approach: Deliver content tailored to individual preferences.

- Illustration: In the blog section of SportsFlex, Jane finds articles like "10 Yoga Routines for Morning Flexibility" or "The Best Yoga Spots in Miami", making her stay longer on the site and engage with the brand.

Email Strategies:

- Approach: Personalized email content based on user behavior and preferences.

- Illustration: A week after placing her order, Jane receives an email titled, "How to Care for Your New Yoga Mat, Jane!" The email contains care instructions, some beginner yoga poses she can try, and a coupon for her next purchase.

These barely scratch the surface of what is possible. I am being illustrative. Most people who know me know I have much more detail around all these approaches and ideas.

By employing these personalized strategies across different channels in a coordinated manner, "SportsFlex" provides Jane with a seamless and tailored shopping experience. Whether she's in-store, on the website, in the mobile app, or reading her emails, every touchpoint feels custom-made for her, enhancing her loyalty and engagement with the brand. The true essence of omnichannel personalization is this consistent, personalized experience across all touchpoints, making the customer feel recognized and valued. This is great, but we need to marry the approach to a campaign. Campaigns can be their own strategies but, in most cases, are overarching initiatives based on the customers' behaviors, so we craft a THEME into the strategies mentioned above.

Challenges and Considerations

While personalization offers numerous benefits, it's not without its challenges. Data privacy concerns are paramount. Marketers must ensure they're compliant with data protection regulations and that customers understand how their data is being used. Furthermore, achieving personalization at scale requires significant technological infrastructure and expertise. Still, the biggest bottleneck is not the technology, models, infrastructure, or data. It is creative and content production: having enough variations to keep up with all the campaigns, products, messages, and experiences the brand wants to convey. If you get this last step right for the creative content, your omnichannel initiative will succeed.

The power of data workflows in omnichannel personalization also cannot be overstated. With the right strategies, tools, and insights, marketers can craft campaigns that resonate deeply with their audience, fostering loyalty and driving conversions. Do not forget this point. It is becoming increasingly critical, especially with cookieless marketing initiatives and all the privacy protections we have worldwide today. Eventually, if my dream came true, the USER would control their profile ID and grant the marketer permission, potentially eliminating the CDP concept we know today. The consumer would release only the profile data they want to ABC Corp or XYZ Corp. They could have two or more different identities based on trust. Once this happens, the CDP takes on a completely different role, and there may be no need for a true CDP as we see it today. Each user would own their own CDP profile for each and every brand, creating and controlling multiple ID avatars and whatever attributes they release to feed the foundation and reinforcement models for the personalization strategies noted above. Let's get more detailed to understand what these CONTROL PLANES might look like.

A.I. Control and Data Planes: Resilient Personalization Data

Let's jump in a bit deeper. As the marketing Omnichannel landscape evolves, the need for an efficient AI data workflow becomes even more pronounced. The pipeline to achieve true personalization is intricate, demanding keen attention to detail at every stage.

Today, most AI and ML models and capabilities sit to the far right, within the campaign delivery and analytics tools. Otherwise they sit one step removed, in a separate platform or sub-platform that runs a type of ML Ops to provide better audiences for the macro or micro segments tied to campaign initiatives.

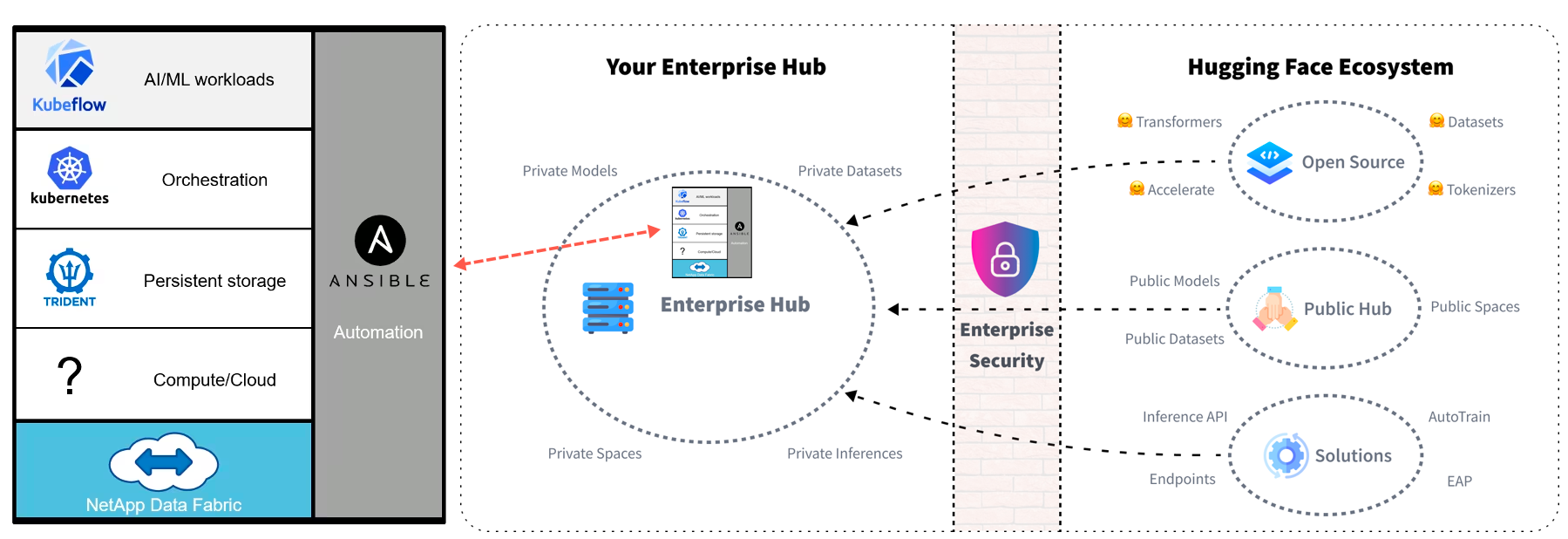

Adobe named its sub-platform Sensei, and Salesforce called its sub-platform Einstein. There are many more like these that we have seen, heard of, or used. Taking it a step further, we could even mention Siri or Alexa, but these are not control planes for AI and ML like what Adobe or Salesforce are pitching to their clients. In ML-Ops terms, the AI data control plane would sit at the ML-Ops sub-system level. Adobe and Salesforce attempted to create a new abstraction for data science and ML-Ops. What I am suggesting with the foundation models and the reinforcement engine specialty models is that we need a layer that helps converge, manage, and leverage the data in a different way, abstracted into an even simpler UI/UX that the business can use. As I mentioned, HuggingFace, H2O, and C3.ai all seem to be moving in this direction. Here is what current ML-Ops looks like per the ML-Ops.org site. No business or marketing team is going to even consider using it. It is not abstracted enough, or reframed around how they could use it to get value.

The future should look much different. Corporations need a layer on top of the current ML Ops layer that helps them gain control of the FOUNDATIONAL MODEL LAYER. This will ensure AI tools can clean and govern the data better, feed the downstream reinforcement personalization engine, customize that engine per line of business, and potentially create a marketplace of specialized models for each line-of-business department so they can hone and optimize the campaigns and experiences they deploy. This idea was illustrated at a high level of abstraction in the diagram at the top of the article.

The two resources referenced below discuss this concept a bit more. They do not address it quite the way I do, but both gave me the idea. CDP vendors must address this going forward as they embed foundation models into their platforms. The resources are https://medium.com/@hzmarrou/embracing-the-future-of-enterprise-ai-with-foundation-models-i-f5bc659acf3e and NumberStation.ai's "Foundation Models in the Modern Data Stack". I illustrated many of these concepts in the diagrams.

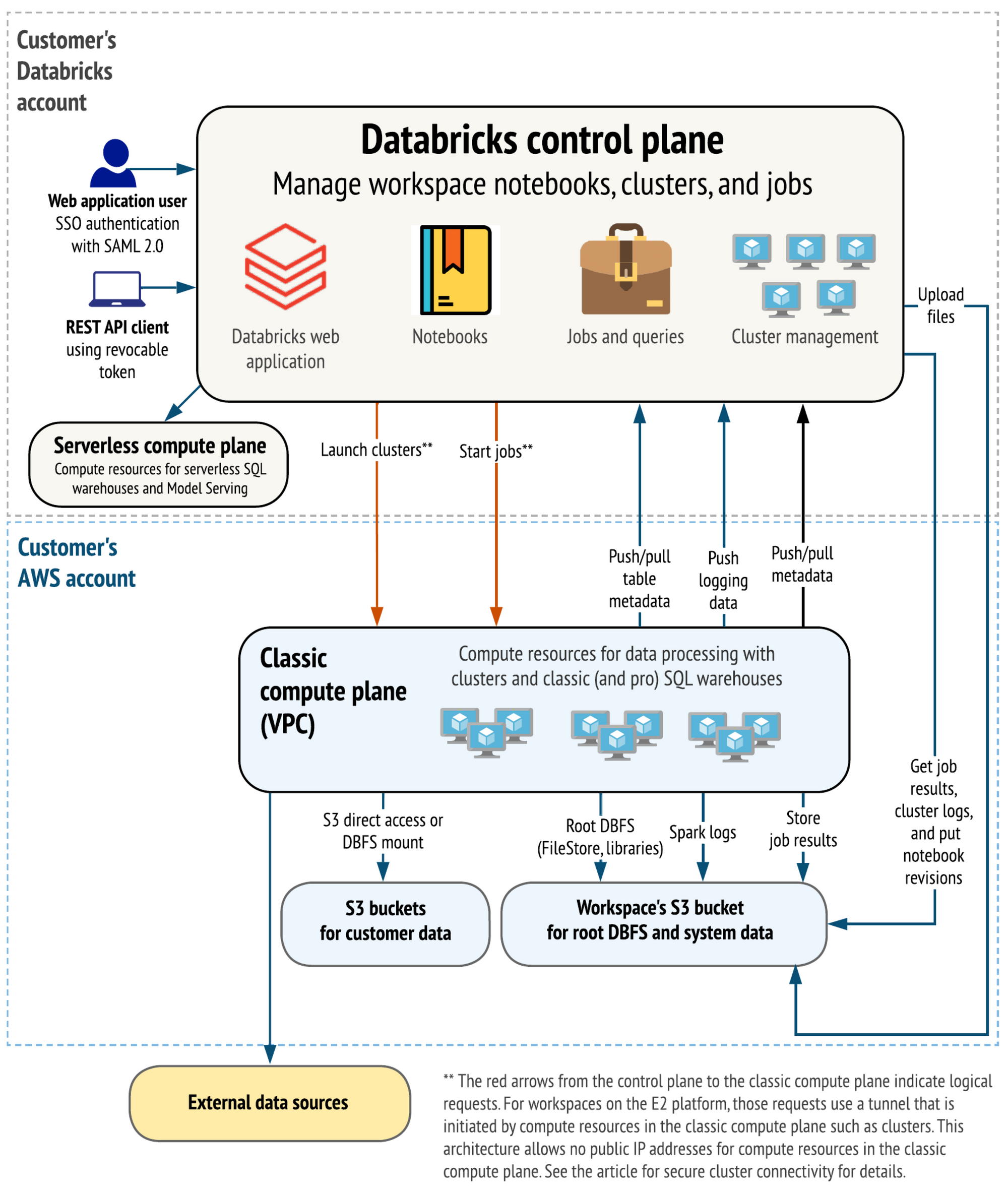

Databricks and many underlying infrastructure tools like HuggingFace.co's Enterprise Hub, C3.ai, H2O.ai, Kubeflow, and NetApp actually name their approach an AI Control Plane, but it is still too cumbersome. Here is an illustration where I combined NetApp with HuggingFace's Enterprise Hub to create a combined AI Data Control Plane. There would need to be a different UI/UX abstraction sitting on top of this reference.

Databricks and potentially Snowflake are closer to this idea and could re-skin or add better UI/UX abstractions to help the line of business depts. across an entire organization.

More detail on Control Planes: The concepts of "control planes" and "data planes" have been adapted to various IT infrastructures, including those used in AI and machine learning. In cloud computing and networking, a control plane decides how traffic should be routed and managed, while a data plane carries the actual traffic or data itself. When applied to AI, these concepts get a unique spin. These tools and ideas sit on top of the actual ML Ops process, a separate function where the data scientists model, simulate, train, and then deploy the models that use the AI control plane described below.

The AI Control Plane: Right now, it is very technical and ML-Ops-centered. The new AI Control Plane would manage, orchestrate, and control the various foundation models, workflows, and processes in an AI environment. The goal is to simplify the business context and abstract it for the line-of-business department, which can then call into the more complex processes through an automated workflow or CI/CD. It could also handle model versioning, monitoring, governance, and scaling. This would be akin to a model manager, a concept that companies like SAS invented. All of this creates more data trust within each line of business. Key components might include:

- Model Management: Overseeing versions of models, and ensuring they're up-to-date and relevant.

- Model Monitoring: Observing models in production to ensure they're performing as expected and not drifting from their intended outputs.

- Workflow Automation: Ensuring data ingestion, preprocessing, model training, validation, and deployment happen seamlessly.

- Governance and Compliance: Managing user permissions, ensuring data privacy, and meeting regulatory requirements.

AI Data Plane: This would handle the data that flows into and out of the AI systems. Given that AI and ML models are highly dependent on data, ensuring that data flows efficiently and in a structured manner is essential. This could involve:

- Data Ingestion: Extracting data from diverse sources and bringing it into the AI environment.

- Data Processing: Transforming and cleaning the data to make it suitable for training.

- Model Serving: Once trained, models must serve predictions in real-time or batch modes. This involves feeding new data into the models and getting outputs.

- Feedback Loops: Using the output from models to refine and retrain them, especially in the context of reinforcement learning or any system where model predictions can influence future data.
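The separation between the two planes can be sketched in a few lines of code. In this toy version the control plane only registers model versions and decides which one is live, while the data plane serves predictions with whatever version is live and logs each one so outcomes can later be joined for retraining. The class and method names are my own assumptions, not the API of any tool mentioned in this article, and the "models" are plain functions standing in for trained ones.

```python
from typing import Callable

Model = Callable[[dict], float]  # a "model" here is any function of features


class ControlPlane:
    """Management side: registers model versions and decides which is live."""

    def __init__(self) -> None:
        self._versions: dict[str, dict[int, Model]] = {}
        self._live: dict[str, int] = {}

    def register(self, name: str, model: Model) -> int:
        versions = self._versions.setdefault(name, {})
        version = len(versions) + 1
        versions[version] = model
        return version

    def promote(self, name: str, version: int) -> None:
        if version not in self._versions.get(name, {}):
            raise KeyError(f"{name} v{version} is not registered")
        self._live[name] = version

    def live_model(self, name: str) -> tuple[int, Model]:
        version = self._live[name]
        return version, self._versions[name][version]


class DataPlane:
    """Execution side: serves predictions and logs them for retraining."""

    def __init__(self, control: ControlPlane) -> None:
        self.control = control
        self.prediction_log: list[dict] = []

    def predict(self, name: str, features: dict) -> float:
        version, model = self.control.live_model(name)
        score = model(features)
        self.prediction_log.append({"model": name, "version": version,
                                    "features": features, "score": score})
        return score


control = ControlPlane()
v1 = control.register("churn", lambda f: 0.2)  # placeholder baseline
v2 = control.register("churn", lambda f: 0.8 if f["days_inactive"] > 30 else 0.1)
control.promote("churn", v1)

plane = DataPlane(control)
first = plane.predict("churn", {"days_inactive": 45})   # served by v1
control.promote("churn", v2)                            # no data plane change
second = plane.predict("churn", {"days_inactive": 45})  # served by v2
```

The point of the split is visible in the last four lines: promoting a new version is purely a control plane action, and the serving code never changes. That is the kind of operation a business-facing abstraction could expose as a single button.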

Tools that serve as AI control planes often provide orchestration, automation, and management capabilities for machine learning workflows. Platforms like MLflow, Kubeflow, TFX (TensorFlow Extended), and Databricks offer features that can be likened to a control plane for AI, helping data scientists and ML engineers streamline and manage their machine learning projects from experimentation to production.

Again, the idea with both of these is to simplify them and abstract them up to the line of business depts. and then span them across the entire organization.

Remember, while the terminologies of "control plane" and "data plane" are borrowed from other domains, their applications in the AI context are still evolving. The idea is to have a clear separation between management and orchestration functions and the actual data processing and model execution functions, then abstract them up to the business vs. keeping it all buried.

The actual technical operations do not change, nor does the workload for CI/CD and DevOps. We are just giving better data transparency, usability, trust, credibility, and access to the entire organization. What the AI control and data planes will do is syndicate the data out to the omnichannel data layer and hydrate the strategies supporting the marketer's initiatives.

Crafting Genuine Connections in a Digital Age

To close out: at its heart, personalization is about making genuine connections. For all the data, algorithms, and technologies, it's essential to remember that behind every data point is a human being with emotions, desires, and aspirations. As marketers, we need to refine data workflows and strategies. We must strike the right balance between personalization and privacy, and between automation and authenticity. Only then can brands create truly memorable experiences, fostering trust and building long-lasting relationships in this ever-evolving digital age.

I hope you all got some value from this article and it spurred ideas and concepts you can leverage in your products, initiatives, and marketing campaigns. As always, if you have comments or questions, please leave them here or DM privately via phone, text, email, or LinkedIn. Thank you for reading and having interest. Happy Halloween! Hopefully, this didn't scare you too much. haha.